Beautiful algorithms used in a compiler and a stack based virtual machine ~Part 4. How to compile functions~

Photo by Fernando Andrade on Unsplash

This is the December 25th article for Wantedly 2021 Advent Calendar.

This is the fourth part of the series "Beautiful algorithms used in a compiler and a stack based virtual machine". In this series, I share algorithms, which I found interesting while implementing a compiler in Go by following two books, "Writing an interpreter in Go" and "Writing a compiler in Go" by Thorsten Ball. The specification of the Monkey language is explained in this page.

In this post, I will focus on how functions are compiled and executed in Monkey. The scope includes execution of local bindings, which are variables defined/resolved in it. This topic is picked up in the seventh chapter of the book "Writing a compiler in Go".

I recommend that you read part 1 and 3 of this blog series. In part 1, the whole set of steps the compiler and the VM in Monkey take to execute the source code is introduced. In part 3, how to compile and execute global bindings are described. The concept of store introduced there wil be used again in this post.

- Part 1. Design of the Monkey compiler

- Part 2. How the Pratt parser in the Monkey compiler works

- Part 3. How to compile global bindings

- Part 4. How to compile functions (this post)

- Part 5. What Monkey does not have, but Java has

For readers who would like to see my completed compiler, please take a look at my github repository kudojp/MonkeyCompiler-Golang2021.

This post consists of five sections. In the first section, I will share the goal of this post. In the second section, I will show the interfaces of the compiler and the VM. They will be completed through the subsequent three sections.

§1. The goal of this post

The goal of this post is to share how Monkey compiles and executes the source code which includes the definitions and the calls of functions. The tokenizing step and the parsing step are not explained in this post. That is, the processes of the AST being converted into the bytecode and the bytecode being executed in the VM are picked up.

I will enable Monkey to execute and the source code like below.

global = 1

let my_function = fn(arg){

local = 2

return global + local + arg

}

my_function(3)In Monkey, functions are not defined as the function statement. Instead, they are defined as the function literal and let statement is used if it needs to be bound to the variable name.

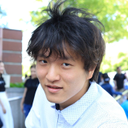

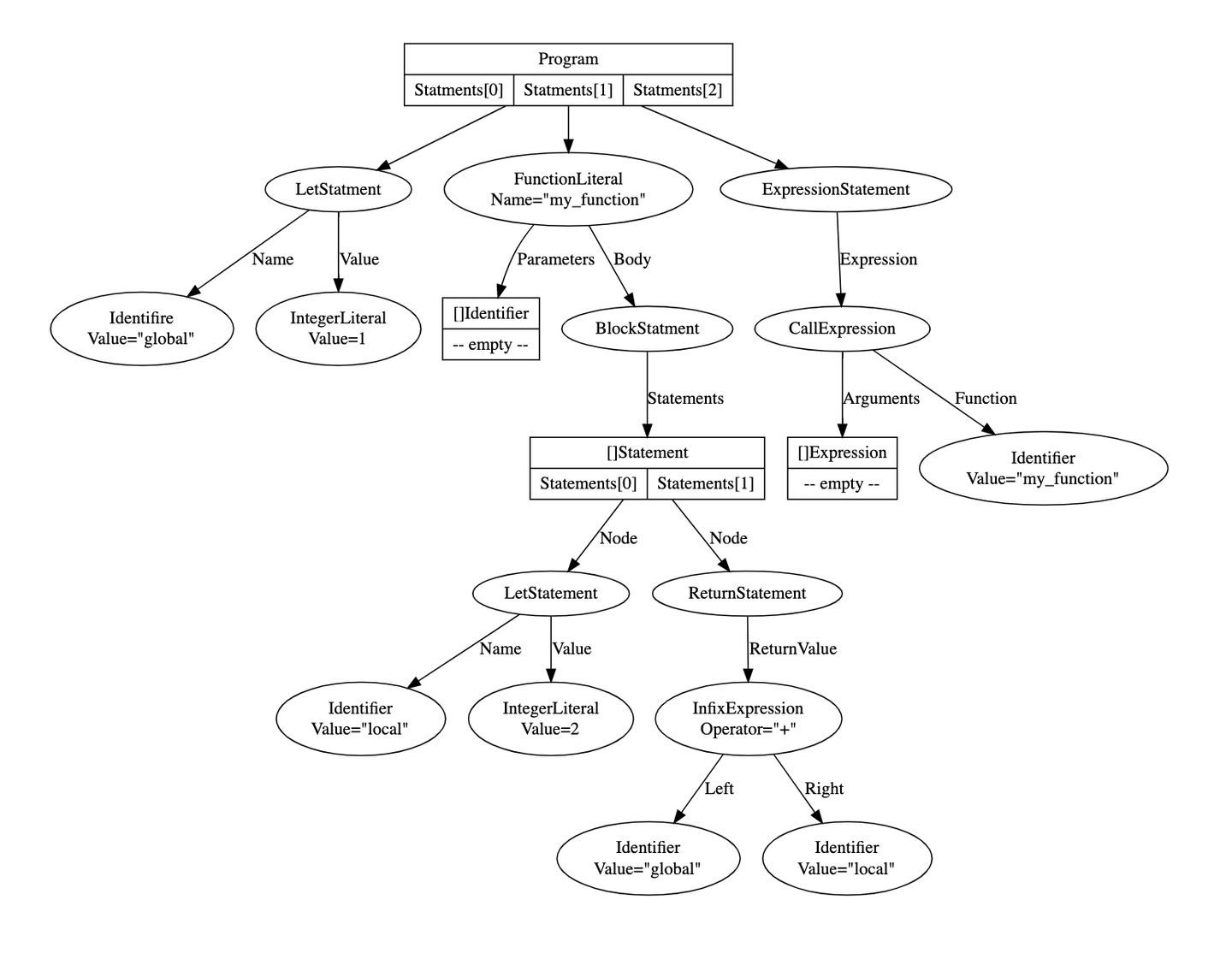

The AST equivalent to the source code above is this.

⬆️ Drawn with GrpahViz

The concept of the closure is out of the scope. Thus, the update to enable Monkey to execute a nested function literal (function literal defined in another function literal) is not explained in this post. If you are interested in it, please take a look at chapter nine of the book "Writing a compiler in Go" or PR#32 of kudojp/MonkeyCompiler-Golang2021.

For simplicity, the compiler implemented in this post assumes that the return value of the function is only one. Also, it is explicitly stated with the return keyword.

§2. Interfaces of the compiler and the VM

Snippets in this section are taken over from part 3 of this series. From the next section, I will update the snippets. The parts to be added in this post are denoted with TODO comments here in this section.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

case *ast.Program:

for _, s := range node.Statements {

c.Compile(s)

}

case *ast.LetStatement:

symbol := c.symbolTable.Define(node.Name.Value)

c.Compile(node.Value)

c.emit(code.OpSetGlobal, symbol.Index)

case *ast.Identifier:

symbol, ok := c.symbolTable.Resolve(node.Value)

if !ok {

panic(fmt.Sprintf("undefined variable %s", node.Value))

}

c.emit(code.OpGetGlobal, symbol.Index)

case *ast.ExpressionStatement:

c.Compile(node.Expression)

c.emit(code.OpPop)

case *ast.IntegerLiteral:

integer := &object.Integer{Value: node.Value}

c.emit(code.OpConstant, c.addConstant(integer))

case *ast.InfixExpression:

c.Compile(node.Left)

c.Compile(node.Right)

switch node.Operator {

case "+":

c.emit(code.OpAdd)

// Omitted here: cases of other infix operators

default:

panic(fmt.Sprintf("unknown operator %s", node.Operator))

}

case *ast.FunctionLiteral:

// TODO

case *ast.BlockStatement:

// TODO

case *ast.ReturnStatement:

// TODO

case *ast.CallExpression:

// TODO

}

}

/*

Add instruction built from args to Compiler's instructions.

Returns the index of the newly added instruction.

*/

func (c *Compiler) emit(op code.Opcode, operands ...int) int {

ins := code.Make(op, operands...)

pos := c.addInstruction(ins)

c.setLastInstruction(op, pos)

return pos

}

/*

Append instruction to Compiler's instructions.

Returns the index of the newly added instruction.

*/

func (c *Compiler) addInstruction(ins []byte) int {

posNewInstruction := len(c.instructions)

c.instructions = append(c.instructions, ins...)

return posNewInstruction

}Interface of the VM is below. I will update the VM to understand the OpSetLocal/OpGetLocal instructions, which are introduced later.

// vm/vm.go

func (vm *VM) Run() {

ip := 0

for ip < len(vm.instructions)-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpConstant:

constIndex := code.ReadUint16(ins[ip+1:])

ip += 2

vm.push(vm.constants[constIndex])

case code.OpAdd:

vm.executeBinaryOperation(op) // See part 3 for the implementation.

case code.OpPop:

vm.pop()

case code.OpSetGlobal:

globalIndex := code.ReadUint16(ins[ip+1:])

ip += 2

vm.globals[globalIndex] = vm.pop()

case code.OpGetGlobal:

globalIndex := code.ReadUint16(ins[ip+1:])

ip += 2

vm.push(vm.globals[globalIndex])

case code.OpSetLocal:

// TODO

case code.OpGetLocal:

// TODO

}

}

}The upcoming updates will be in three steps. Firstly, I will enable the compiler to compile and the VM to execute a simple function which does not use bindings or arguments. Secondly, I will enable them to compile and execute a function with bindings in it. Lastly, I will enable them to compile and execute a function with both bindings and arguments.

Please note that the snippets in this section are simplified from the codebase kudojp/MonkeyCompiler-Golang2021. Even though the compiler and the VM implemented in this post execute a valid AST correctly, they are not guaranteed to throw compile/runtime errors as expected when an invalid AST is given.

§3. Compile a simple function (Step 1/3)

In this section, I enable Monkey to execute a simple function which uses neither bindings nor arguments in it. The updates in this section are added in PR#27 in the codebase kudojp/MonkeyCompiler-Golang2021.

I will use the source code below as an example.

let my_function = fn(){

return 1

}

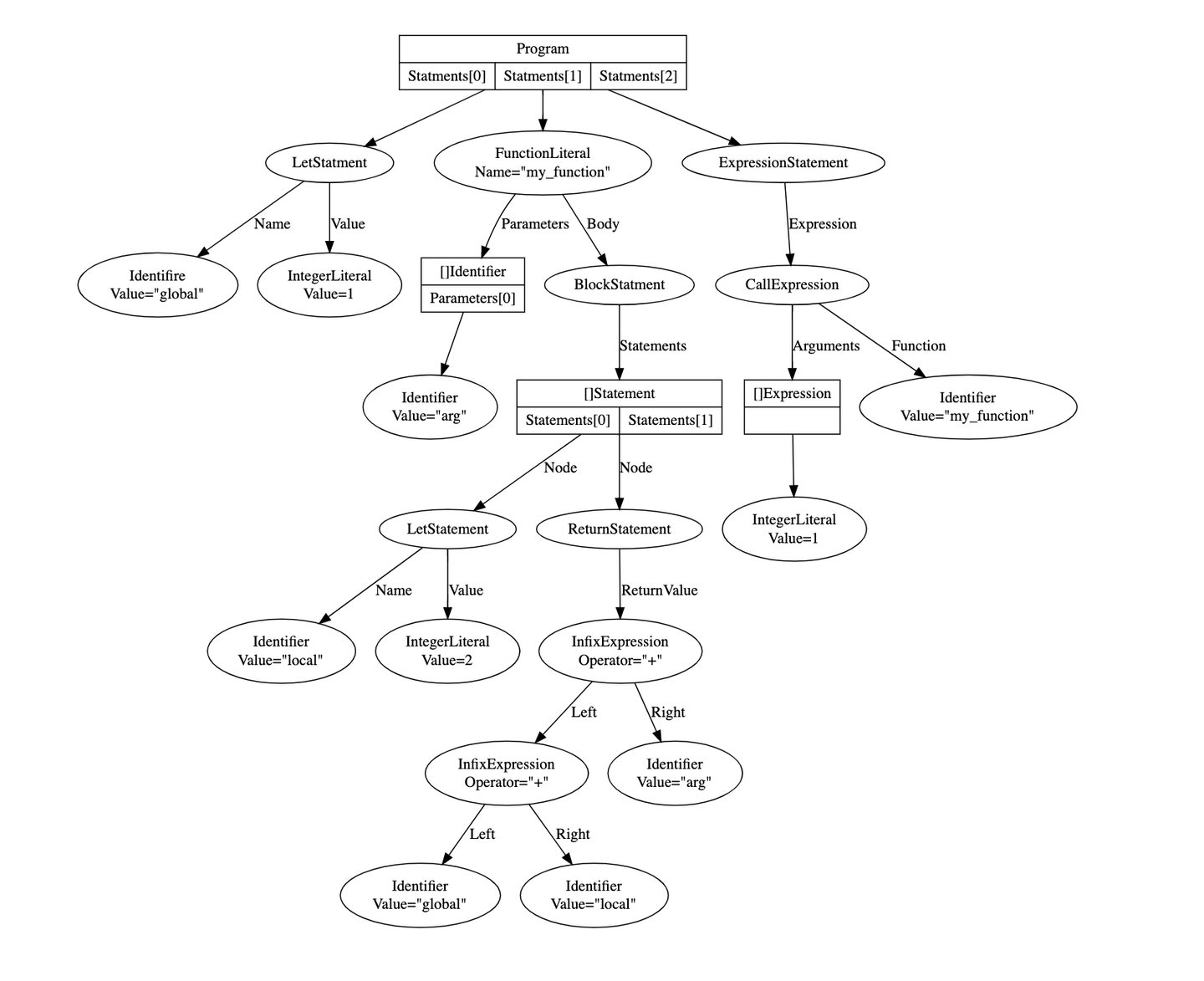

my_function()The AST which represents this source code is below.

⬆️ Drawn with GraphViz

Design

Before implementation, I will explain the design of how Monkey compiles and executes (1)the definition of a function and (2)the function call.

(1) The definition of a function is equivalent to the FunctionLiteral node in the AST. The FunctionLiteral node is compiled into the corresponding object, the same way as integer literals and strings are. The generated object is put in the constant pool in the bytecode. In the VM, it is referenced when it encounters an OpConstant instruction.

Below is the struct of the object converted from a function literal. It holds the instructions which correspond to the body of the function literal.

// object/object.go

type CompiledFunction struct {

Instructions code.Instructions

}(2) The function call is equivalent to the CallExpression node in the AST, which is compiled into an OpCall instruction. In the VM, when it encouters the OpCall instruction, it moves the context and start executing instructions held in CompiledFunction object which should be at the top of the data stack at the moment. (If the top object is not a CompiledFunction, the runtime error is thrown.) When the compiler encounters an OpReturnValue instruction, which should the last instruction held in the CompiledFunction object, the VM goes back and resume executing instructions which were being executed before the context move.

Implement the compiler

I will show the implementation of compiling the FunctionLiteral node, the BlockStatement node, the ReturnStatement node, and the CallExpression node below. To compile the FunctionLiteral node, I first have to introduce another concept.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.FunctionLiteral:

// TODO

case *ast.BlockStatement:

for _, s := range node.Statements {

c.Compile(s)

}

case *ast.ReturnStatement:

c.Compile(node.ReturnValue) // This object is left on the stack

c.emit(code.OpReturnValue)

case *ast.CallExpression:

c.Compile(node.Function)

c.emit(code.OpCall)

}

}I have to take care of the fact that the FunctionLiteral could be nested. To handle this, I introduce a struct CompilationScope in the compiler. The CompilationScope suggests in which scope the compiler is currently compiling, as the name suggests. It holds instructions the compiler has compiled so far in that scope.

// compiler/compiler.go

type CompilationScope struct {

instructions code.Instructions

}A new CompilationScope struct is created when the compiler compiles a new function literal nested in the current function literal. This newly created one is put on the stack named scopes. This logic is held in the enterScope method as below.

// compiler/compiler.go

type Compiler struct {

constants []object.Object

scopes []CompilationScope

scopeIndex int

}

func (c *Compiler) enterScope() {

scope := CompilationScope{

instructions: code.Instructions{},

}

c.scopes = append(c.scopes, scope)

c.scopeIndex++

}Conversely, after the compiler finishes compiling the function literal, it pops out the CompilationScope from scopes (stack). This logic is held in the leaveScope method as below. This method returns the whole set of instructions equivalent to the body of the function literal, whose compilation has been finished. This is used to instantiate the CompiledFunction object in the previous scope. The instantiated object will be put in the constant pool.

// compiler/compiler.go

func (c *Compiler) leaveScope() code.Instructions {

instructions := c.currentInstructions()

c.scopes = c.scopes[:len(c.scopes)-1]

c.scopeIndex--

return instructions

}To append instructions to the current CompilationScope's instructions, I update the emit method as below. In this way, the compiler can switch the scopes where emitted instructions are appended.

// compiler/compiler.go

/*

Build an instruction from args and append it to current to CompilerScope's instructions.

Returns an index of the newly added opcode.

*/

func (c *Compiler) emit(op code.Opcode, operands ...int) int {

ins := code.Make(op, operands...)

pos := c.addInstruction(ins)

c.setLastInstruction(op, pos)

return pos

}

/*

Append instruction to current CompilerScope's instructions.

Returns an index of the newly added opcode.

*/

func (c *Compiler) addInstruction(ins []byte) int {

posNewInstruction := len(c.currentInstructions())

updatedInstructions := append(c.currentInstructions(), ins...)

c.scopes[c.scopeIndex].instructions = updatedInstructions

return posNewInstruction

}By switching the CompilationScope, the compiler can compile nested functions ingeniously. This happens when the function literals are deeply nested.

When a new Compiler is instantiated, it should instantiate a new CompilationScope which corresponds the main scope and append it in the scopes stack.

// compiler/compiler.go

func New() *Compiler {

mainScope := CompilationScope{

instructions: code.Instructions{},

}

return &Compiler{

constants: []object.Object{},

scopes: []CompilationScope{mainScope},

scopeIndex: 0,

}

}With the update above, the compiler can compile a FunctionLiteral node in this way.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.FunctionLiteral:

c.enterScope()

c.Compile(node.Body)

instructions := c.leaveScope()

compiledFn := &object.CompiledFunction{Instructions: instructions}

c.emit(code.OpConstant, c.addConstant(compiledFn))

}

}Now I will implement the logic to compile a CallExpression node, which corresponds to the function call in the source code. The compiler emits the instruction to put the CompiledFunction object onto the data stack and then emits an OpCall instruction.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.CallExpression:

c.Compile(node.Function)

c.emit(code.OpCall)

}

}Implement the VM

The VM should work in these ways.

- When the VM encounters a FunctionCall instruction, it begins to execute the instructions held in the CompiledFunction object which is at the top of the data stack at the moment. If the object is not a CompiledFunction, it throws the runtime error.

- When the VM finishes executing all the instructions held in the CompiledFunction object, it leaves the object to be returned on the data stack and go back to where it was executing.

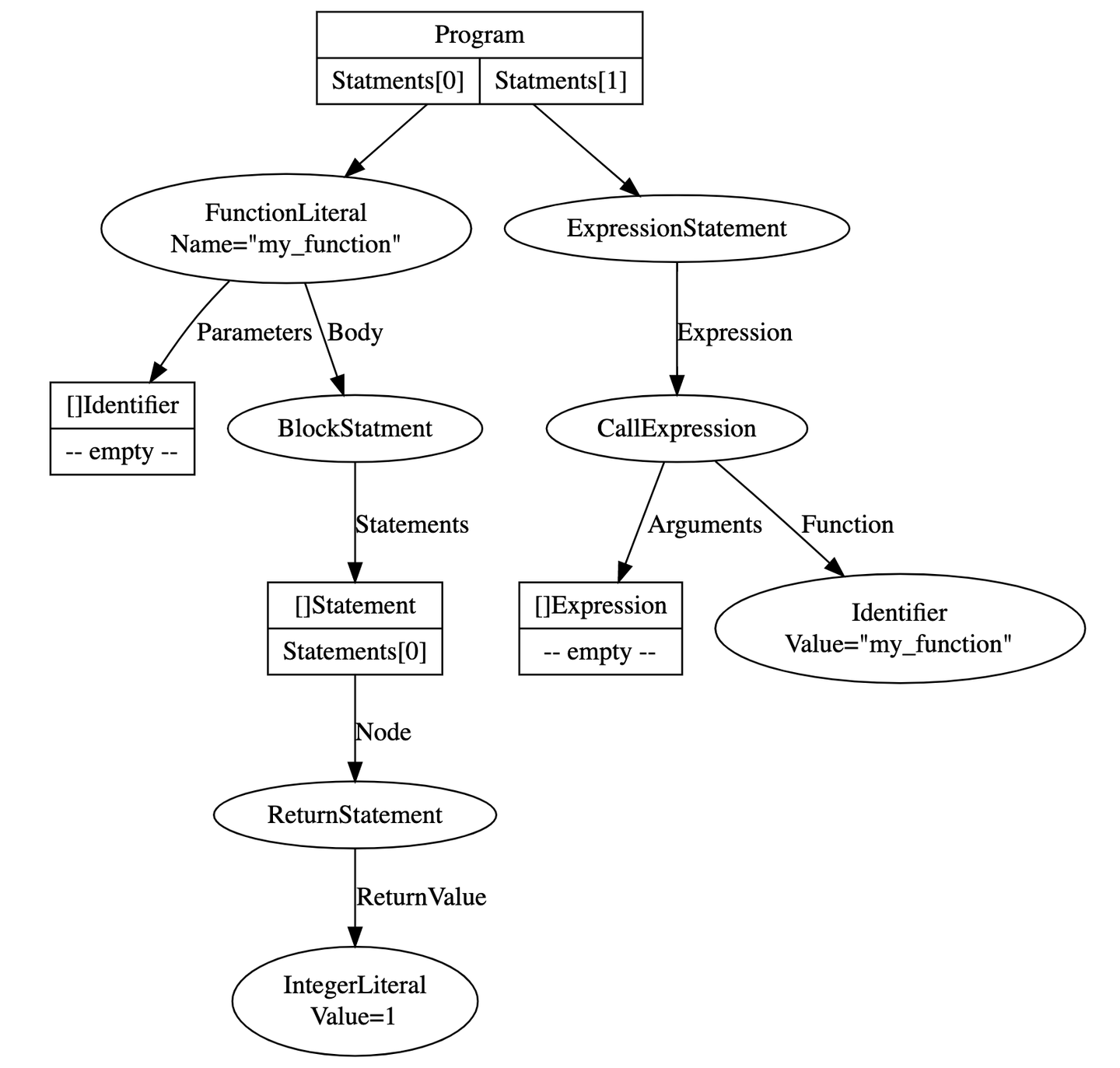

Considering that function calls could be nested, I introduce the concept of the frame and the call stack into the VM here. (Please note that in some programming languages, only one stack plays roles of both the call stack and the data stack.)

The frame corresponds to the one function call in the source code. In Monkey, the frame contains these two data.

- A CompiledFunction object, which holds the instructions compiled from the body of the corresponding function literal in the source code.

- An instruction pointer, which indicates the index of an instruction which should be executed next.

// vm/frame.go

type Frame struct {

fn *object.CompiledFunction

ip int

}

func (f *Frame) Instructions() code.Instructions {

return f.cl.Fn.Instructions

}The call stack is where the frames are pushed. Creating and pushing a new frame into the calls stack corresponds to calling a function in the source code. Popping the frame from the calls stack corresponds to the completion of the function execution. If function calls are executed in the nested manner in the source code, multiple frames are put on this stack in the VM.

I will update the VM to create a new frame and pushes it on the call stack named frames when the VM encounters an OpCall instruction.

// vm/vm.go

type VM struct {

constants []object.Object

stack []object.Object

sp int // Always point to the next value. Top of stack is stack[sp-1]

frames []*Frame

framesIndex int

}

func (vm *VM) currentFrame() *Frame {

return vm.frames[vm.framesIndex-1]

}

func (vm *VM) pushFrame(f *Frame) {

vm.frames[vm.framesIndex] = f

vm.framesIndex++

}When the VM finishes executing all the instructions held in the CompiledFunction of the current frame, it pops out the frame to execute the subsequent instructions in the previous frame.

// vm/vm.go

func (vm *VM) popFrame() {

vm.framesIndex--

}I update the Run method of the VM as below to execute instructions held in the current frame.

// vm/vm.go

func (vm *VM) Run() {

var ip int

var ins code.Instructions

var op code.Opcode

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

vm.currentFrame().ip++

ip = vm.currentFrame().ip

ins = vm.currentFrame().Instructions()

op = code.Opcode(ins[ip])

switch op {

case code.OpConstant:

constIndex := code.ReadUint16(ins[ip+1:])

vm.currentFrame().ip += 2

err := vm.push(vm.constants[constIndex])

if err != nil {

// Omitted: other cases are also updated in the same way.

}

}

}When a new VM is instantiated, it should have one frame corresponding to the main scope in the call stack (frames).

// vm/vm.go

func New(bytecode *compiler.Bytecode) *VM {

mainFn := &object.CompiledFunction{Instructions: bytecode.Instructions}

mainFrame := NewFrame(mainFn)

frames := make([]*Frame, MaxFrames)

frames[0] = mainFrame

return &VM{

constants: bytecode.Constants,

globals: make([]object.Object, GlobalsSize),

stack: make([]object.Object, StackSize),

sp: 0,

frames: frames,

framesIndex: 1,

}

}Now I complete the Run method of the VM.

When the VM encounters an OpCall instruction, it is the time to create and put a new frame onto the call stack (scopes). When the VM encounters an OpReturnValue instruction, it should pop the current frame with the return Value put on the data stack.

// vm/vm.go

func (vm *VM) Run() {

var ip int

var ins code.Instructions

var op code.Opcode

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpCall:

fn := vm.stack[vm.sp-1].(*object.CompiledFunction)

frame := NewFrame(fn)

vm.pushFrame(frame) // go into the context of the callee

case code.OpReturnValue:

returnValue := vm.pop()

vm.popFrame() // go back to context of the caller

vm.pop()

vm.push(returnValue) // leave the return value on the data stack

// Omitted: other cases to handle other opcodes.

}

}

}§4. Compile a function with bindings (Step 2/3)

In this section, I enable Monkey to execute a function with bindings in it. The updates in this section are added in PR#28 in the codebase kudojp/MonkeyCompiler-Golang2021.

The snippet below is the example.

global = 1

let my_function = fn(){

local = 2 // define a local binding

return global + local // resolve eglobal and local bindings

}

my_function()The AST which represents this source code is below.

⬆️ Drawn with GrpahViz

Design

The methodology to define and resolve global bindings was explained in part 3 of this series. The compiler uses a store to index global bindings in the source code. I extend this strategy to handle local bindings in the function. Also, I introduce new opcodes, OpSetLocal and OpGetLocal, in the bytecode and enable the VM to execute them like below.

- The compiler emits an OpSetLocal instruction when compiling the definition of a local variable in the function. The operand indicates the index of the local bindings in the same scope.

- The compiler emits an OpGetLocal instruction when compiling a resolution of a local variable. The operand indicates the index of the local bindings in the same scope.

- When the VM encounters an OpSetLocal instruction, it pops out an object from the locals store and pushes it onto the data stack. The operand indicates the index in the local store in the VM.

- When the VM encounters an OpGetLocal instruction, it copies an object from the local store and push it onto the data stack. The operand indicates the index in the local store.

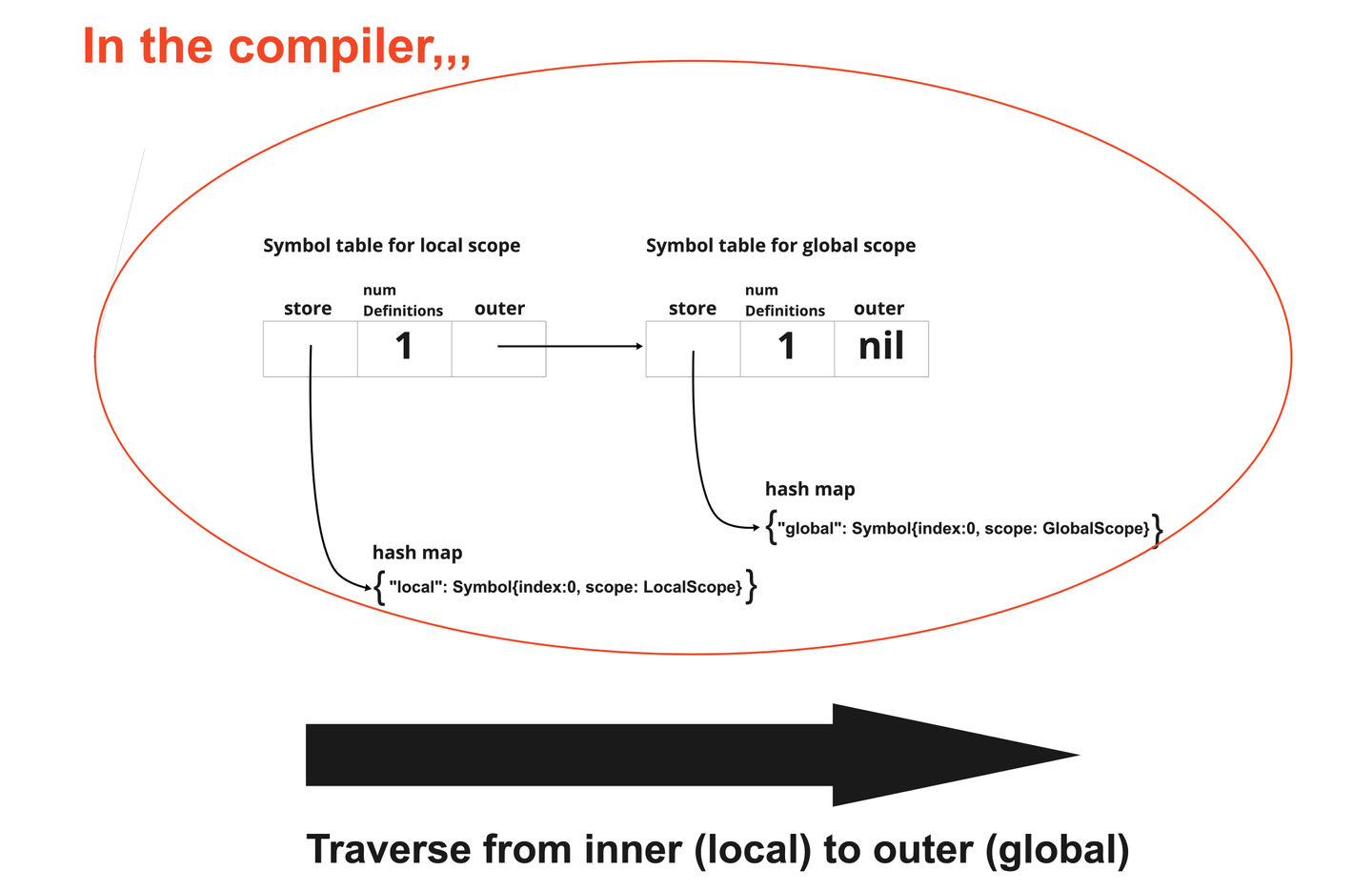

For global variables, the compiler uses the symbol table to index global variables, and the VM uses a hash map named globals store. In this step, I will apply these ideas to local bindings in these ways.

- I will extend the symbol table to enable resolving/defining both global and local bindings in the compiler.

- I will secure spaces used as the local store in the VM.

Implement the compiler

I will extend the symbol table to enable resolving/defining both global and local bindings in the compiler.

In the previous post, I introduced a concept of symbol table for indexing global variables. I extend this data store to handle indexing of variables in every scope. For that, I prepare a symbol table for each scope and link them to be a linked list. The head node (innermost table) is responsible for the local scope, and the tail node(outermost table) is for global scope. When function literal is nested as a closure, the linked list has more than two nodes.

In this post, a closure is out of scope. Thus, the requirement is to identify all variables used in the local scope by the combination of the scope(local or global) and the index.

That being said, SymbolTable is extended as below.

// compiler/symbol_table.go

type SymbolScope string

const (

GlobalScope SymbolScope = "GLOBAL"

LocalScope SymbolScope = "LOCAL"

)

type Symbol struct {

Name string

Scope SymbolScope

Index int

}

type SymbolTable struct {

Outer *SymbolTable

store map[string]Symbol

numDefinitions int

}When a new binding is defined, it should be indexed in the innermost table.

// compiler/symbol_table.go

func (s *SymbolTable) Define(name string) Symbol {

symbol := Symbol{Name: name, Index: s.numDefinitions}

if s.Outer == nil {

symbol.Scope = GlobalScope

} else {

symbol.Scope = LocalScope

}

s.store[name] = symbol

s.numDefinitions++

return symbol

}When resolving a binding, it should check from the inner table to the outer.

// compiler/symbol_table.go

func (s *SymbolTable) Resolve(name string) (Symbol, bool) {

obj, ok := s.store[name]

if s.Outer == nil || ok {

return obj, ok

}

return s.Outer.Resolve(name)

}The implementation of the symbol table is completed above.

When compiling a function literal, teh compiler creates a new scope struct. At this moment, a new table should be appended at the head of the list of tables.

// compiler/compiler.go

func (c *Compiler) enterScope() {

scope := CompilationScope{

instructions: code.Instructions{},

lastInstruction: EmittedInstruction{},

previousInstruction: EmittedInstruction{},

}

c.scopes = append(c.scopes, scope)

c.scopeIndex++

c.symbolTable = NewEnclosedSymbolTable(c.symbolTable) // New

}

func NewEnclosedSymbolTable(outer *SymbolTable) *SymbolTable {

s := NewSymbolTable()

s.Outer = outer

return s

}When leaving the scope, the compiler have to abondone a table at the head.

// compiler/compiler.go

func (c *Compiler) leaveScope() code.Instructions {

instructions := c.currentInstructions()

c.scopes = c.scopes[:len(c.scopes)-1]

c.scopeIndex--

c.symbolTable = c.symbolTable.Outer

return instructions

}Now, I implement the logic of compiling a LetStatement node, which corresponds to the definition of the binding in the source code.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.LetStatement:

c.Compile(node.Value)

symbol := c.symbolTable.Define(node.Name.Value)

if symbol.Scope == GlobalScope {

c.emit(code.OpSetGlobal, symbol.Index)

} else {

c.emit(code.OpSetLocal, symbol.Index)

}

}

}Also, I complete the implementation of compiling an Identifier node, which corresponds to the resolution of the binding in the source code.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted; other cases

case *ast.Identifier:

symbol, ok := c.symbolTable.Resolve(node.Value)

if !ok {

return fmt.Errorf("undefined variable %s", node.Value)

}

if symbol.Scope == GlobalScope {

c.emit(code.OpGetGlobal, symbol.Index)

} else {

c.emit(code.OpGetLocal, symbol.Index)

}

}

}Implement the VM

I will secure spaces used as the local store in the VM.

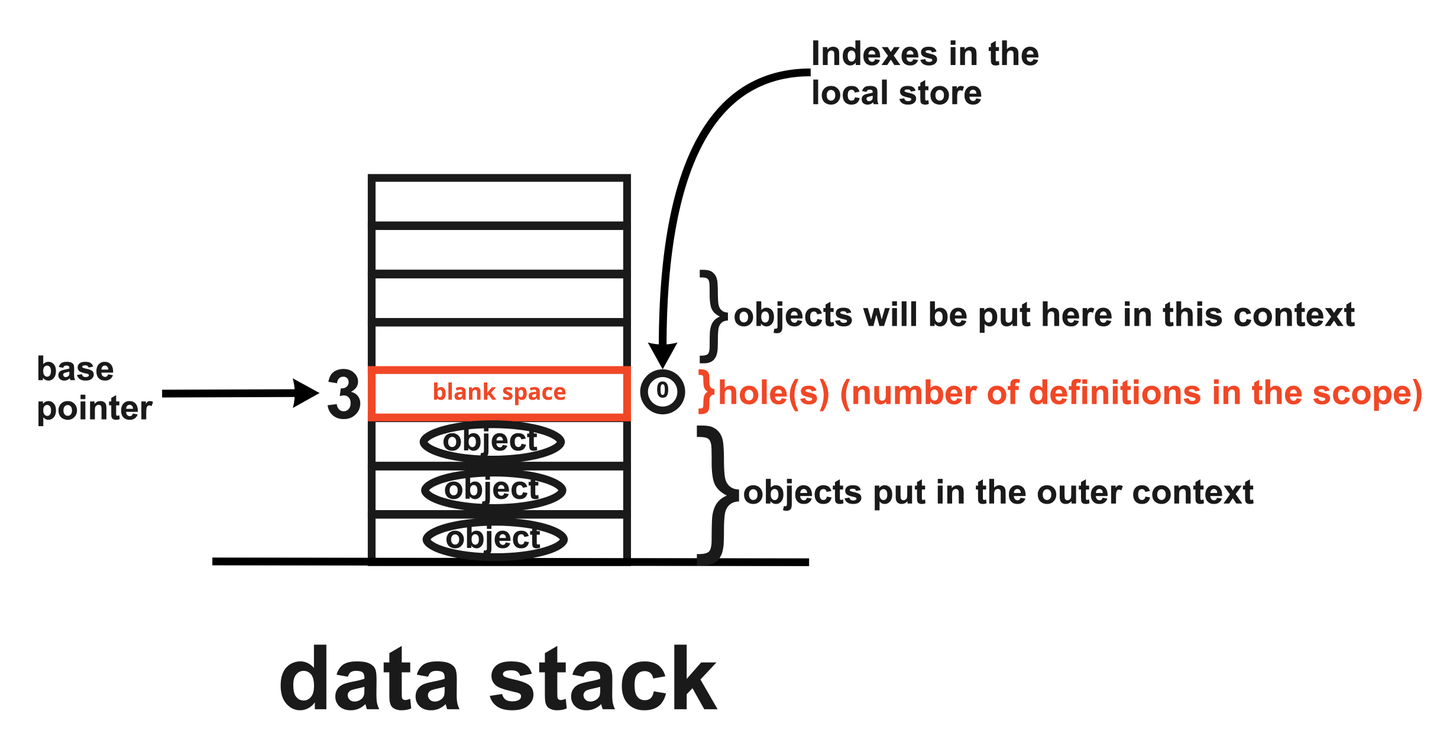

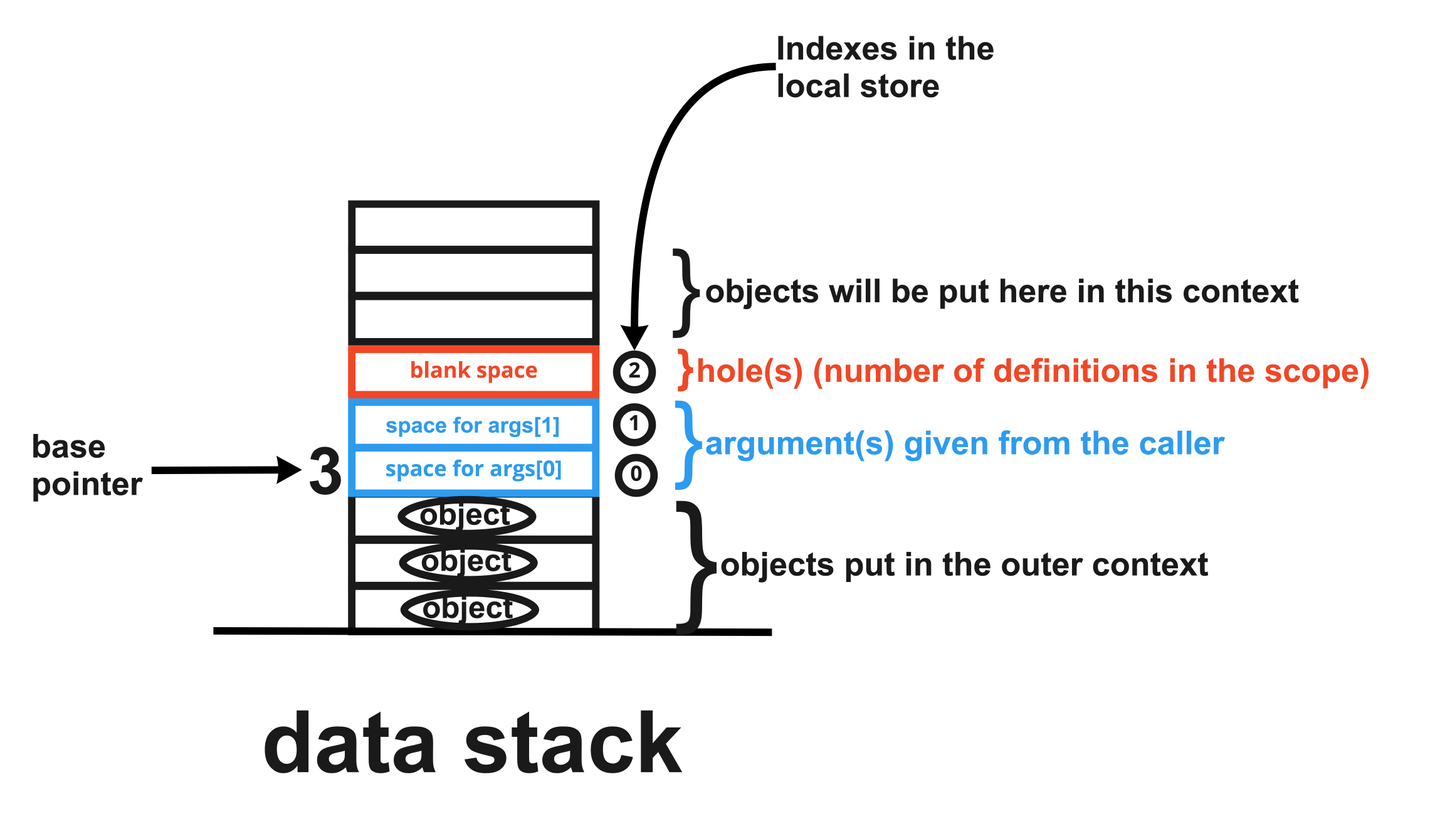

When the VM encounters an OpCallExpression instruction, it executes the function corresponding to the CompiledFunction object at the top of the stack. In this process, the VM uses some spaces in the data stack as the local store. Since the VM knows how many local bindings from the CompiledFunction object's numDefinitions field, it should secure that number of spaces in the data stack, as holes, from the base pointer. The base pointer points to the bottom of the stack of the current frame.

The index ( i ) indicated by an OpSetGlobal/OpGetGlobal instruction is mapped into ( base pointer + i ) in the data stack.

For this update, I have to include the basepointer field in the Frame struct.

// vm/frame.go

type Frame struct {

fn *object.CompiledFunction

ip int

basePointer int

}When the VM encounters the OpCall instruction, it creates a new call frame. The stack pointer in the new frame should skip spaces of the holes.

// vm/vm.go

func (vm *VM) Run() {

// Omitted

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpCall:

fn := vm.stack[vm.sp-1].(*object.CompiledFunction)

// The current stack pointer will be

// a base pointer of the next frame.

frame := NewFrame(fn, vm.sp)

vm.pushFrame(frame)

// skip spaces of the holes

vm.sp = frame.basePointer + fn.NumLocals

// Omitted: other cases to handle other opcodes.

}

}

}When the VM encounters the OpReturnValue instruction, it is the end of the function call. The VM moves the stack pointer back to where it was in the previous frame. It is one index lower than the current base pointer.

// vm/vm.go

func (vm *VM) Run() {

// Omitted

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpReturnValue:

returnValue := vm.pop()

frame := vm.popFrame()

// Revert the stack pointer after popping the last frame

vm.sp = frame.basePointer - 1

err := vm.push(returnValue)

// Omitted: other cases to handle other opcodes.

}

}

}I will enable the VM can now handle OpSetLocal/OpGetLocal instructions.

When the VM encounters an OpSetLocal instruction, the object at the top of the data stack should be popped out and then inserted in the local store. It is the space at index (index of base pointer + index indicated by the operand) in the data stack.

// vm/vm.go

func (vm *VM) Run() {

// Omitted

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpSetLocal:

localIndex := code.ReadUint8(ins[ip+1:])

vm.currentFrame().ip += 1

frame := vm.currentFrame()

vm.stack[frame.basePointer+int(localIndex)] = vm.pop()

// Omitted: other cases to handle other opcodes.

}

}

}When the VM encounters an OpGetLocal instruction, the object in the local store should be copied and put at the top of the data stack. The object to be copied at (index of base pointer + index indicated by the operand) in the data stack.

// vm/vm.go

func (vm *VM) Run() {

// Omitted

for vm.currentFrame().ip < len(vm.currentFrame().Instructions())-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpGetLocal:

localIndex := code.ReadUint8(ins[ip+1:])

vm.currentFrame().ip += 1

frame := vm.currentFrame()

vm.push(vm.stack[frame.basePointer+int(localIndex)])

// Omitted: other cases to handle other opcodes.

}

}

}§5. Compile a function with arguments (Step 3/3)

In this step, I enable Monkey to execute a function which takes at least one argument. The updates in this section is added in PR#30 in the codebase kudojp/MonkeyCompiler-Golang2021.

I will use the source code below as an example.

global = 1

// takes an argument

let my_function = fn(arg){

local = 2

return global + local + arg

}

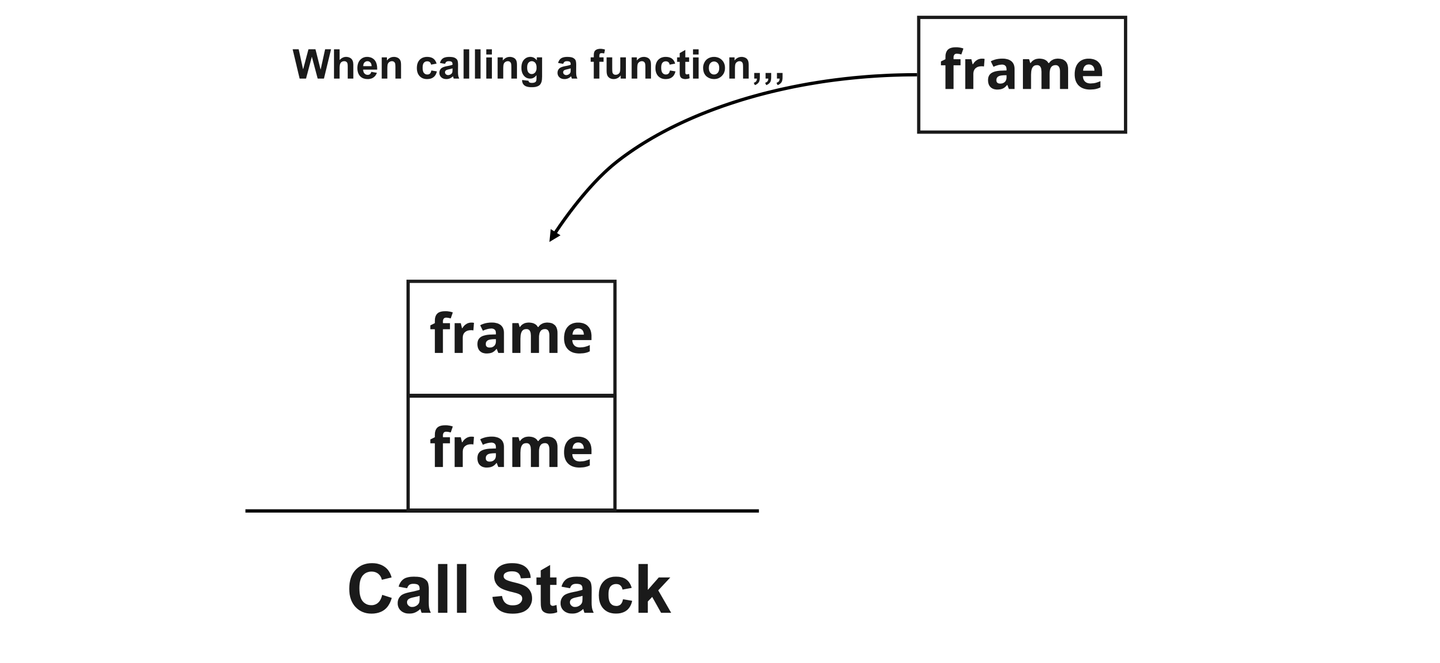

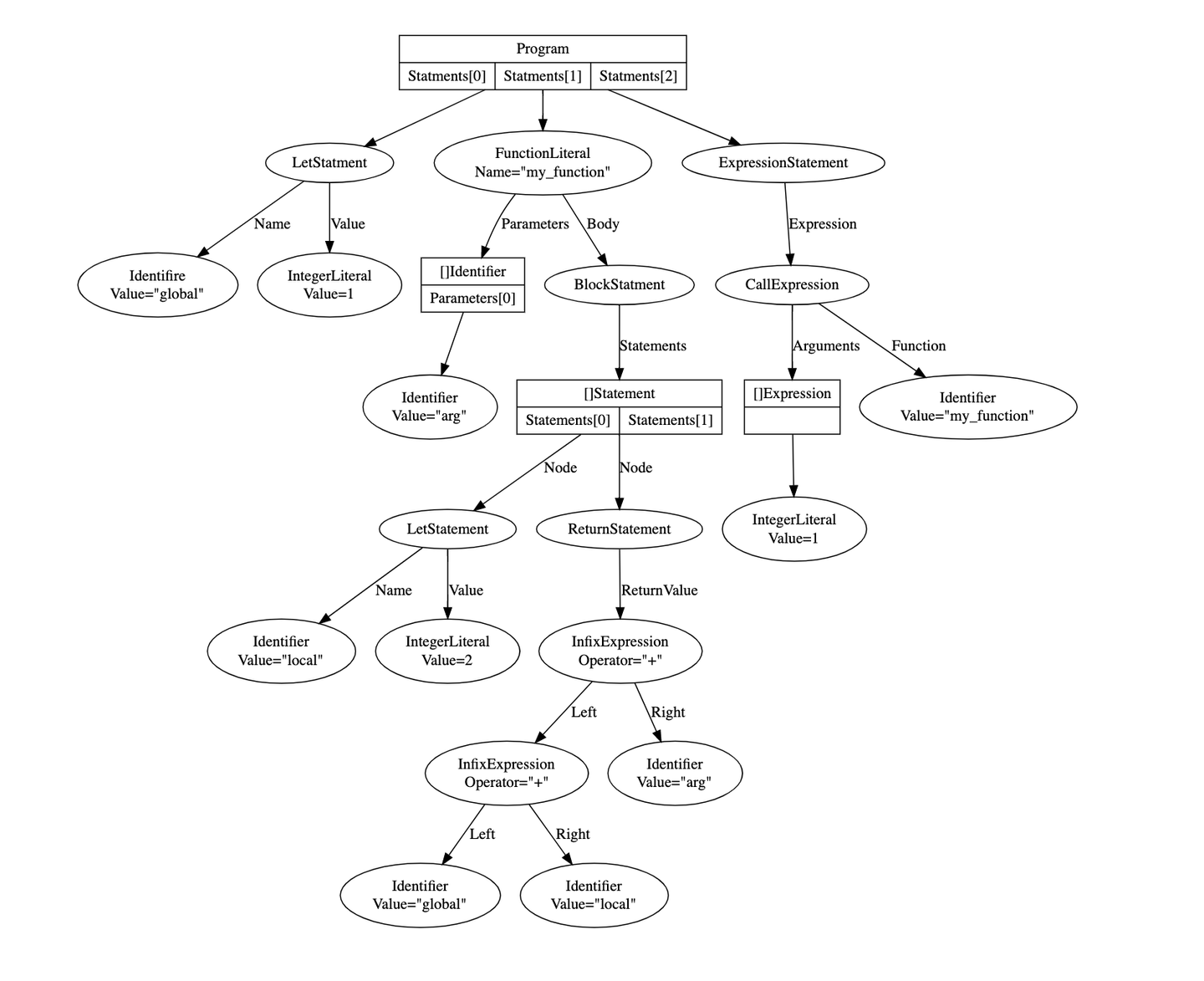

fn(3)This corresponds to the AST below.

⬆️ Drawn with GrpahViz

Design

In the VM, objects corresponding to arguments are passed through the data stack. After the next frame is put in the data stack in the VM, objects of arguments are pushed on the data stack. They are indexed as local binidings in the new frame.

Implent the compiler

When compiling a CallExpression node, the compiler emits the instructions to tell the VM to push objects corresponding to arguments onto the data stack. Then, the compiler emits an OpCall instruction.

To notify the VM of how many arguments are passed, I update the definition of the Opcall instruction to take one operand which indicates the number of arguments.

// code/code.go

OpCall: {"OpCall", []int{1}}I update instructions emitted from the compiler when it encounters a CallExpression node.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.CallExpression:

c.Compile(node.Function)

for _, a := range node.Arguments {

c.Compile(a)

}

c.emit(code.OpCall, len(node.Arguments))

}

}I update the process which is taken when compiling a FunctionLiteral node. Parameters have to be defined in the symbol table since they are local bindings.

// compiler/compiler.go

func (c *Compiler) Compile(node ast.Node) {

switch node := node.(type) {

// Omitted: other cases

case *ast.FunctionLiteral:

c.enterScope()

// New

for _, p := range node.Parameters {

c.symbolTable.Define(p.Value)

}

c.Compile(node.Body)

numLocals := c.symbolTable.numDefinitions

instructions := c.leaveScope()

compiledFn := &object.CompiledFunction{

Instructions: instructions,

NumLocals: numLocals,

NumParameters: len(node.Parameters),

}

c.emit(code.OpConstant, c.addConstant(compiledFn))

}

}Implement the VM

I update the process which the VM takes when encountering an OpCall instruction.

When the VM switches into the new frame, argument objects should be treated so that they are in the new local store, with the base pointer moved to point to the first argument object. They are acheived with this.

// vm/vm.go

func (vm *VM) Run() {

ip := 0

for ip < len(vm.instructions)-1 {

ip += 1

op := code.Opcode(ins[ip])

switch op {

case code.OpCall:

numArgs := code.ReadUint8(ins[ip+1:])

vm.currentFrame().ip += 1

vm.callFunction(int(numArgs))

}

}

}

func (vm *VM) callFunction(numArgs int) {

fn := vm.stack[vm.sp-1-numArgs].(*object.CompiledFunction)

if fn.NumParameters != numArgs {

panic("wrong number of arguments: want=%d, got=%d", fn.NumParameters, numArgs)

}

// The base pointer in the new frame should point to the object

// which corresponds to the first argument.

frame := NewFrame(fn, vm.sp-numArgs)

vm.pushFrame(frame)

vm.sp = frame.basePointer + fn.NumLocals

}Summary

In this post, I explained on how the compiler and the VM execute global bindings. When compiling, the compiler uses a hashmap named symbol table for indexing the global variable names in the source code. OpSetGlobal/OpGetGlobal instructions in the bytecode tell the VM to put/copy objects in the globals store. The position (index) in the store is specified by the operands.

At this version, Monkey cannnot execute nested functions. The updates to handle closures are added in the chapter nine of th book "Writing a compiler in Go".